Copyright © Christian Pasquel. All Rights Reserved.

10 min

2023

#Bot #GPT3 #Twitter #AI

Conception

I've always liked the idea of transforming data into something else. I've done that with generative art, as with the HashCubes I produced using Bitcoin data, or the demonic sounds I did for The Unperson Project with my friends and amazing artists Susana Moyaho and Andrea Tejeda. So when I started to experiment with GPT-3 completions, one of my first data sources was Bitcoin data. I connected Wolfram Language to the OpenAI API and made a small program for GPT-3 to describe Bitcoin blocks.

GPT-3 is an amazing tool but, of course, it's not perfect. Sometimes it makes mistakes and provides incorrect information. My approach to handling this is humor. This is actually inspired by the novel The Moon is a Harsh Mistress, the book that introduced me to Robert Heinlein. I won't spoil the book for you, but it starts with HOLMES IV (Mike) and Manuel (Mannie), the two main characters of the story. Mike is a computer that has achieved self-awareness and Mannie is the technician who discovers that Mike is conscious. One of their first interactions is when Mike asks Mannie to explain humor to it. Mannie tries some techniques that, surprisingly, are similar to how neural nets are trained in modern times (the book is from 1966). Eventually Mike develops a sense of humor.

So what I thought was…if GPT-3 doesn't always give me accurate information, what if I ask it to add some humor? That way, if it messes up, it's not so critical, because the whole idea is to produce something funny, even ridiculous. And that doesn't apply just to GPT-3's limitations but to my own as well. My code is not perfect and neither are the APIs I use, but I know how to handle errors so, if there's an error in my code or in a service, I can handle it with humor too.

Finally, I wanted this bot to have its own personality and aesthetics so Dani Situ (who I can't thank enough for this and all credit goes to her) came up with the super cute logo for the Block Narrator:

This became the Block Narrator bot.

Development

My first experiment connecting GPT-3 to Bitcoin data is described in this Twitter thread. That's the embryonic program for what became the Block Narrator.

I started by identifying the Bitcoin data I wanted GPT-3 to work with. I used Wolfram Language for that, in particular, the BlockchainBlockData function. I worked at Wolfram Research for 10 years and Wolfram Language became one of my main development and computational exploration tools and still is. I'm no longer affiliated with Wolfram but I learned a lot from my time there and from Stephen Wolfram himself so I'm happy to mention when I use their tech.

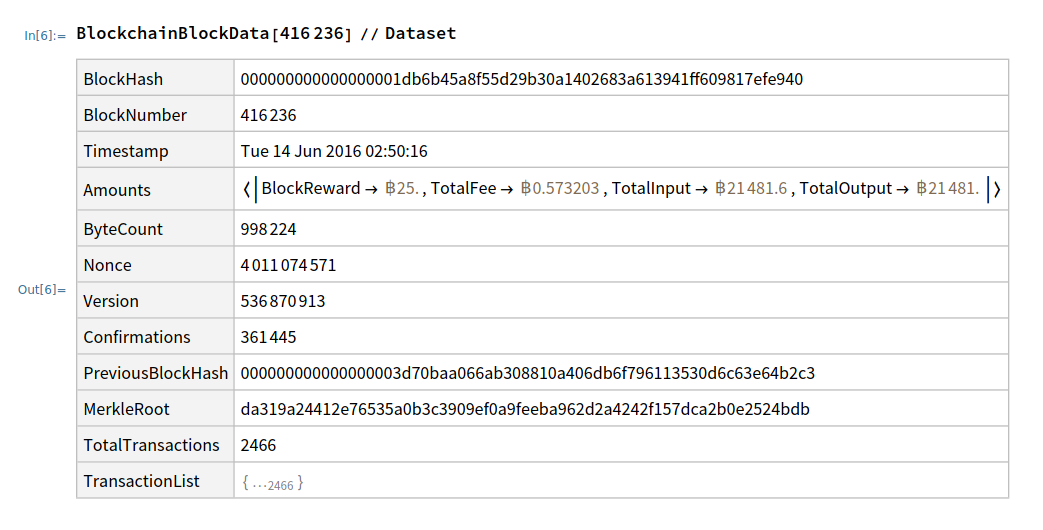

With BlockchainBlockData you can easily connect to the Bitcoin blockchain (and other blockchains) and extract, in this case, computable block data that looks something like this:

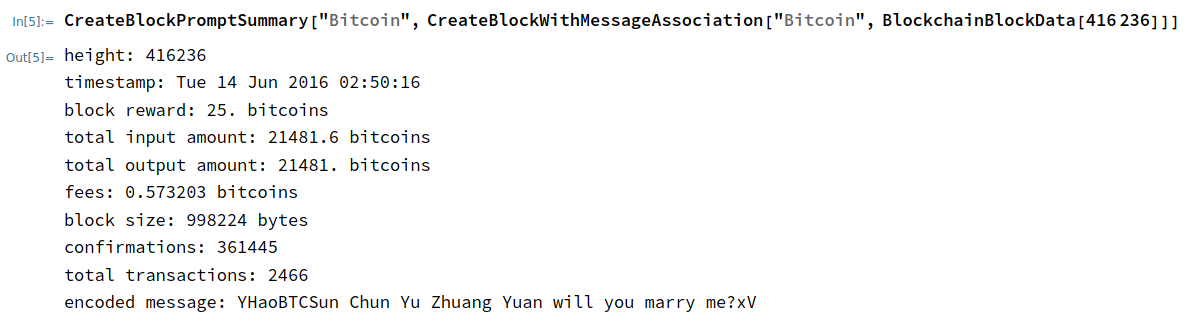

Now, with a bit of data processing and string manipulation, it's very easy to go from that dataset to something that looks like this:

That is just a string formatted as a paragraph, which is sent to GPT-3 so it can describe it. Here I included the basic info about a block but I also added an "encoded message", which is just the message some miners include in the coinbase transaction. These messages are typically just the name of the mining pool or something similar, but there are other blocks like block 416236 where the miner included something more interesting, such as the marriage proposal in this block. The messages are included in the input of the coinbase transaction. Each one is stored as a hexadecimal string, so you need to convert each byte to its decimal character code and map those codes to characters to reconstruct the message. There are more creative ways to store data in Bitcoin blocks. I actually made one technique that I haven't seen used by others but I'll write about that in another post.
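To make the hex-to-text step concrete, here is a minimal Python sketch (my actual program does this in Wolfram Language). The function name decode_coinbase_message is made up for this example, and it assumes you already have the coinbase input script as a hex string:

```python
def decode_coinbase_message(hex_script: str) -> str:
    """Turn a hex-encoded coinbase script into a string, one character per byte."""
    # Iterating over bytes yields each byte as a decimal code (0-255).
    codes = list(bytes.fromhex(hex_script))
    # Map every code to its character. Non-printable bytes are kept as-is,
    # so the result usually still contains "garbage" around the readable part.
    return "".join(chr(code) for code in codes)


# Hex for the ASCII text "/slush/", a typical mining pool tag.
print(decode_coinbase_message("2f736c7573682f"))
```

The output of a real coinbase script will normally need cleaning before it is readable, since most of the bytes are not text at all.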

Anyway, I converted this block-data-to-paragraph program to an API using APIFunction, which is a super useful function to create APIs. I literally can create APIs with it while holding my breath. Going off on a tangent here but I think there should be livecoding events where livecoders hold their breath and code stuff. I deployed this to the Wolfram Cloud and now I have a RESTful API that receives a block height and returns a paragraph with the data I need.

My bot needs to send this to GPT-3 for it to produce a description. Doing that is very easy. You just need to use the Create Completion endpoint of the OpenAI API:

POST https://api.openai.com/v1/completions

Example using curl:

curl https://api.openai.com/v1/completions \
  -H 'Content-Type: application/json' \
  -H 'Authorization: Bearer YOUR_API_KEY' \
  -d '{
    "model": "text-davinci-003",
    "prompt": "your prompt",
    "max_tokens": 7,
    "temperature": 0.8
  }'

Set model to text-davinci-003 and pick a temperature; I'm using 0.9 (the curl example above shows 0.8). Send your prompt in the prompt parameter. Don't forget that you need an API key, which goes in the Authorization header.
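If you prefer Python to curl, the same request can be sketched with just the standard library. This is not the bot's actual code: the function names and the max_tokens default of 256 are assumptions for illustration, and the response is read from the first entry of the choices field.

```python
import json
import urllib.request

API_URL = "https://api.openai.com/v1/completions"


def build_completion_request(prompt, api_key, max_tokens=256):
    """Build the POST request for the Create Completion endpoint."""
    payload = {
        "model": "text-davinci-003",
        "prompt": prompt,
        "max_tokens": max_tokens,  # assumed value, tune it for your tweets
        "temperature": 0.9,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def extract_text(response_body):
    """Pull the generated text out of the parsed JSON response."""
    return response_body["choices"][0]["text"].strip()


def describe_block(paragraph, api_key):
    """Send the block paragraph to the API and return the description."""
    request = build_completion_request(paragraph, api_key)
    with urllib.request.urlopen(request, timeout=30) as response:
        return extract_text(json.load(response))
```

Splitting the request building from the network call makes it easy to check the payload without spending API credits.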

The API will return (inside the choices field) the text the bot uses to create the tweets. I get a text like this one:

"Bitcoin block is like a treasure chest full of digital coins. It's got a big shiny lock on top, and the key to unlock it is the unique Hash. The Height of the block is like the number of floors in a tall building, and the Timestamp is like the time the block was created. It's super valuable, with 25 Bitcoins inside, which is the Block reward. If you add up all the inputs and outputs, you'll get 21481.6 Bitcoins, which is like a mountain of gold coins. The Block size is like the size of the chest, and it's pretty hefty at 998224 bytes. To make sure the block is secure, it needs Confirmations, and this block has 360355 of them!"

Now, I needed to rasterize the text and overlay it onto a template image Dani made that looks like this:

For that I used this Python code, built on top of the PIL library. Once I had polished the spacing between the text and the images, I got something like this:

The last step is to tweet that. I do that using the Tweepy Python library. I deployed all that to my Ubuntu server and scheduled a cron job to run at 3:33 AM and 3:33 PM.

I added more stuff like error handling and error logs in case an API is down or something goes wrong. In the first version of this bot the errors are quiet in the sense that the Block Narrator doesn't communicate to the external world if something is wrong (just to me) but I'm progressively making it more verbose so it can communicate to people if something didn't work correctly and also add some humor to it.

This looks very straightforward but I ran into some issues I want to describe here.

Parsing the coinbase transaction

Many messages included in the coinbase transaction contain "garbage", i.e. text that is not human-readable and surrounds the actual readable text. These are some examples:

\.03-Ü\.0b\[RawEscape]Mined by AntPool958\.1c\.00T\.02a\.10ä«ú¾mm\

\[Micro] \

$¹hkþ\"\.b3\[Divide]sãCîå9x|\.11Í]}YtþÌ\.02\.00\.00\.00\.00\.00\

\.00\.00î\[RawEscape]\.00\.00\.00\.00\.00\.00\.00

\.03ÐÒ \.0bú¾mmÐ\[Paragraph]òJéÈÄ Æ>Ý4É®ê`ï¼ÁOº`öqëG \.00 \.00 \

\.00 \.00 \.00 \.00 \.00 \.03e \.08 \.00\[Degree]t»,\.00 \.00 \.00 \

\.00 \.00 \.00:þÃ \n/slush/

I don't want to send garbage to GPT-3 so I needed to clean up the messages. I'm sure there's a better way to do this but my solution was to use this regex to filter weird characters:

[^A-Za-z0-9\\?\\s]|[\\x00-\\x1f]

Hey if you have a better solution, please let me know!
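For reference, here is roughly how that filter looks in Python. It's a sketch, not my production code: the name clean_message is made up, and collapsing the leftover whitespace at the end is an extra step I'm assuming you'd want.

```python
import re

# Matches anything that is not a letter, digit, "?" or whitespace,
# plus every control character in the range 0x00-0x1f.
GARBAGE = re.compile(r"[^A-Za-z0-9?\s]|[\x00-\x1f]")


def clean_message(raw: str) -> str:
    """Delete the unreadable characters and tidy up the remaining spaces."""
    cleaned = GARBAGE.sub("", raw)
    # Extra step (an assumption): collapse runs of spaces left behind.
    return " ".join(cleaned.split())


print(clean_message("\x03-Ü\x0b\x1bMined by AntPool958\x1c\x00"))
```

Note that this filter is blunt: it also removes punctuation such as the slashes in /slush/, and stray letters from the binary part can survive.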

Tweet from multiple accounts

I saw this is a common problem for people using Twitter's API to develop bots. However, there's no super clear answer, so I hope I can provide some insight here.

The problem is: you should only register one Twitter Developer Account, which may be linked to your personal Twitter account. I understand that's a common case, and it was my case as well. From the Twitter Developer Portal you can get all the data you need to tweet using the API, i.e. keys, tokens, secrets, etc.

However, the tokens only work for the Twitter account linked to the developer account. I didn't want the bot to tweet from my personal account. I wanted it to tweet from the @BlockNarrator account, and I didn't want to open a new developer account for it. Not only is that super impractical if you plan to eventually deploy many bots, but it's also against Twitter's terms of service.

So what you need to do is give the bot account permission to tweet using the app you registered with your developer account. It's not an actual "app"; it's just how you register a connection to the Twitter API.

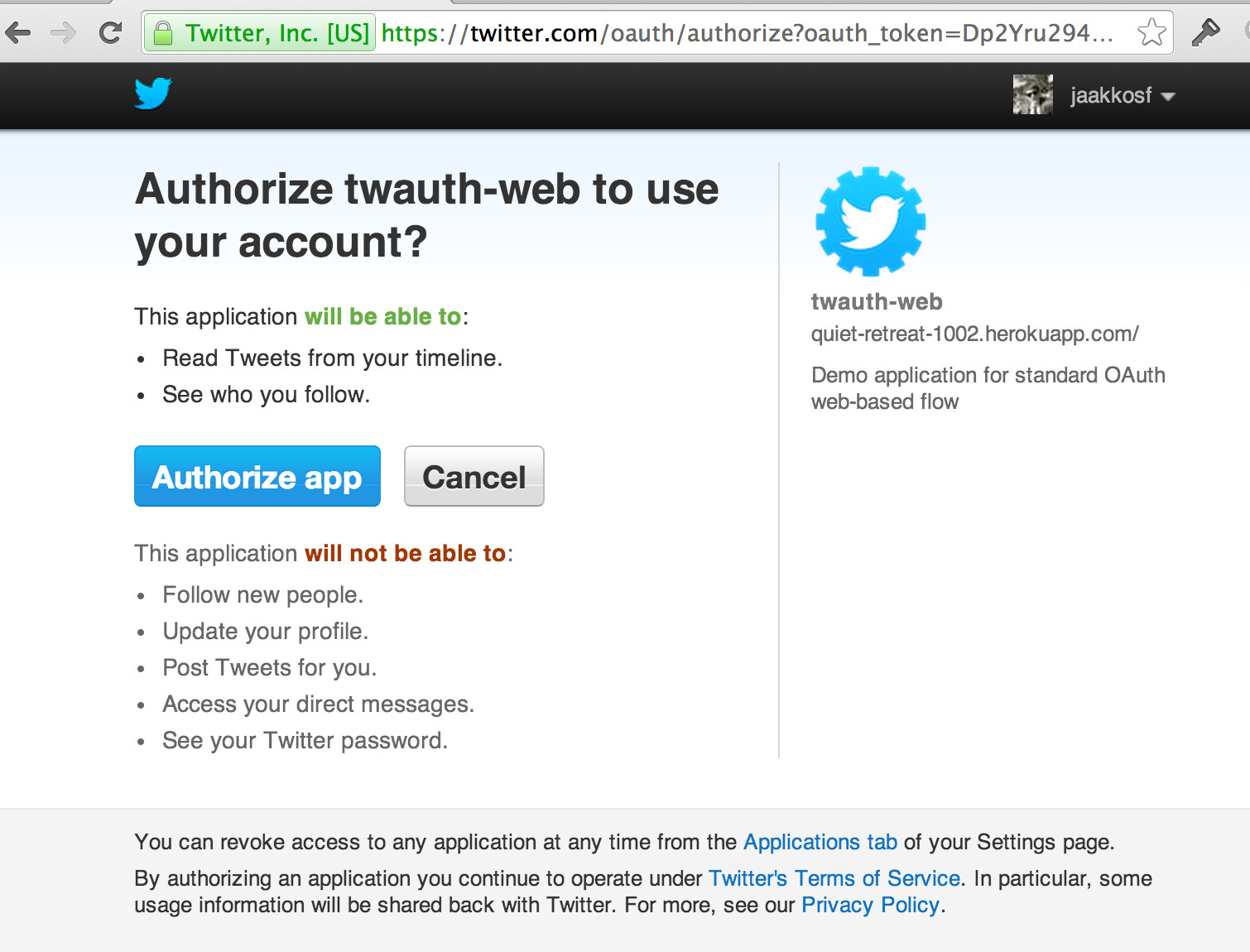

The most practical way for me to do this was to follow the 3-legged OAuth flow using PIN-based authentication. Sounds weird, right? Don't worry, I got you covered.

What all this means is that you need to create a link that will show a screen like this one:

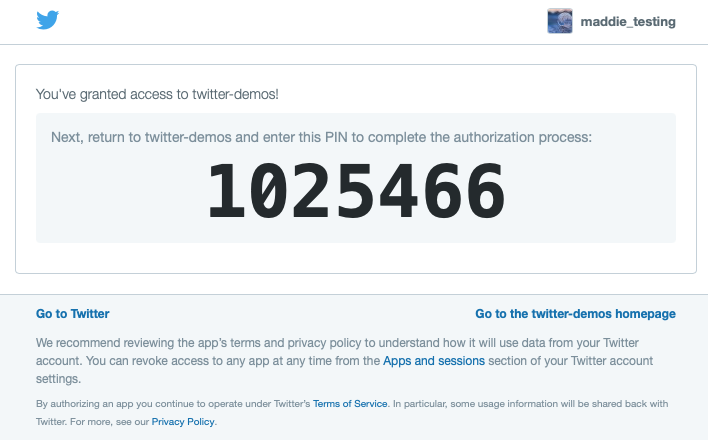

You need to be logged in with your bot account (or the account that you will use to tweet via the API) and press the Authorize app button. This will take you to another screen with a PIN that looks something like this:

And with that PIN you can create the tokens you will use in your scripts to tweet from another account.

OK…but how do I actually do all that?

I have a script for that of course. I just wanted you to first understand the concept of what the script will do for you. Here's the Python script:

import tweepy

# Use this to give access to other bot accounts.
#
# Instructions:
# 1. Log in to your bot's Twitter account.
# 2. Fill in the lines below for the consumer key and secret.
# 3. Run this script.
# 4. Click on the link that the script produces, then click "Authorize App"
#    (make sure you are logged in to your bot's Twitter account!).
# 5. Copy the code shown in the web browser, paste it into the console
#    running this script and press enter.
# 6. The auth key and secret for your bot's account should be shown.
#    Use them in your other Tweepy script!
#
# Ref: https://gist.github.com/CombustibleToast/0e175a174d3666fff0c498097f021e01#file-pinbasedbotauth-py
# Ref: https://developer.twitter.com/en/docs/authentication/oauth-1-0a/obtaining-user-access-tokens

# From your app settings page
CONSUMER_KEY = "{Twitter API Key}"
CONSUMER_SECRET = "{Twitter API Key Secret}"

# callback="oob" asks for the PIN-based (out-of-band) flow
oauth1_user_handler = tweepy.OAuth1UserHandler(
    CONSUMER_KEY, CONSUMER_SECRET, callback="oob"
)

auth_url = oauth1_user_handler.get_authorization_url()
print('Please authorize: ' + auth_url)

# The script waits here until you paste the PIN shown in the browser
verifier = input('PIN: ').strip()
access_token, access_token_secret = oauth1_user_handler.get_access_token(verifier)

print("ACCESS_KEY = '{}'".format(access_token))
print("ACCESS_SECRET = '{}'".format(access_token_secret))

In that code, you just need to change the CONSUMER_KEY and CONSUMER_SECRET variables. Those are just other names for the API Key and API Key Secret, which are the names the Twitter API actually uses. You get them in your Developer Portal when creating an app.

So when you run that code you will get a URL (produced by the print('Please authorize: ' + auth_url) line).

Copy and paste that URL into your browser and be sure you are logged in as the account that will actually tweet (i.e. the bot account). Authorize the app. You'll get to the PIN screen. Copy the PIN and paste it into the terminal or notebook you are using to run the Python script. It should be waiting there for you to provide the PIN. Once you enter the PIN, you will get the authentication tokens. These are similar to the tokens you get in the Developer Portal. However, these tokens are for the account you will use to tweet which, in this case, is not the account linked to the developer account.

These tokens should not expire, so there's no need to request them again. If you create them again, the tokens you previously generated may become invalid. Store the set of tokens you just created in a secure place. These are the tokens you will use with the Tweepy library. If your tokens, account or app are compromised, you can always revoke permissions.

I hope I saved you some time if you had the same problem. If this didn't work for you or you still have questions, just contact me.

Image composition

The original design Dani produced for the Block Narrator's tweets was like this one:

There, we use two different fonts: one for the text and one for the double quotation marks. That was Dani's idea.

However, when I started to code this I ran into the issue of placing the double quotation marks in the precise spot at the beginning and end of the text. That was super hard for me to do in Python. It's much easier to achieve with Wolfram Language using ImageCompose and Style but I wanted to use Python for this.

Also, I didn't want to spend hours on this problem so I came up with another solution. It's easy to compute the vertical coordinate at which the text ends but it's very hard (for me) to compute the horizontal coordinate. And I need to do this because of the two different fonts. I need to first rasterize the main text, then rasterize the opening quotation marks, then rasterize the closing quotation marks and merge everything together. It's not like just appending a character to the end of the text.

Since it was easier for me to compute the vertical coordinates, I placed the double quotation marks like this:

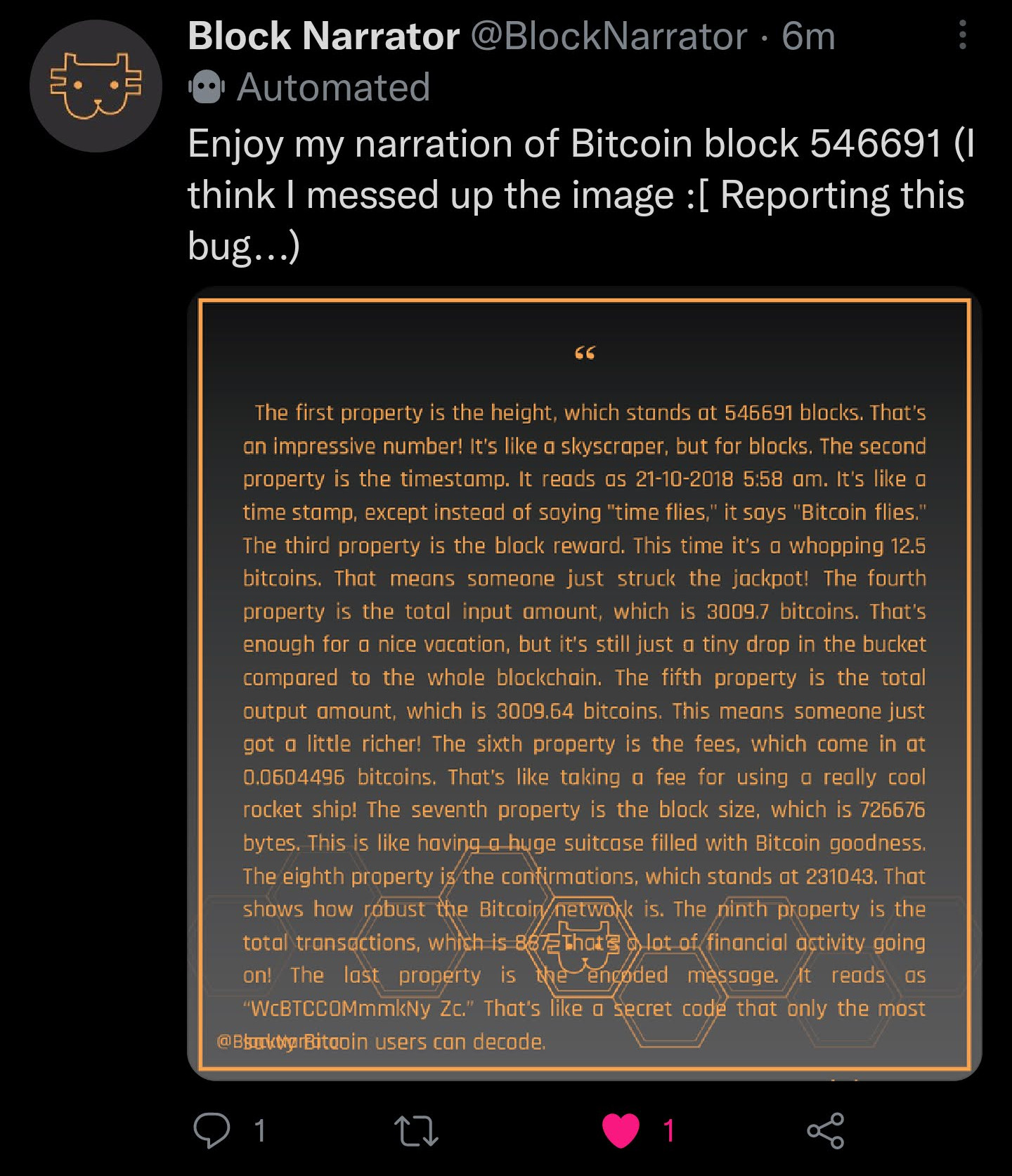

Which looks nice! However, I still have a (yet unsolved) bug which happens when the text is too long and produces this text-image overlap:

Is it solvable? Yes, I could play with the number of tokens GPT-3 uses. However, I took this as an opportunity to add some humor to the Block Narrator bot. Since I can detect when this happens (because the vertical coordinate goes over a threshold value), the bot includes in the tweet that it made a mistake and that it's buggy or something like that.
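The detection itself can be sketched in a few lines of plain Python. This is a simplified version and not the bot's PIL code: it assumes a fixed line height and a fixed number of characters per line, and every number and name below (top_y, line_height, chars_per_line, max_y) is a hypothetical value rather than the real template's measurements.

```python
import textwrap


def text_bottom_y(text, top_y, line_height, chars_per_line):
    """Estimate the vertical coordinate where the wrapped text ends."""
    lines = textwrap.wrap(text, width=chars_per_line)
    return top_y + len(lines) * line_height


def overlaps_artwork(text, top_y=120, line_height=40, chars_per_line=48, max_y=800):
    """True when the text would run past the threshold and into the image."""
    return text_bottom_y(text, top_y, line_height, chars_per_line) > max_y


description = " ".join(["word"] * 10)
# Ten four-letter words at nine characters per line wrap into five lines.
print(text_bottom_y(description, top_y=100, line_height=40, chars_per_line=9))
```

When overlaps_artwork returns True, the bot can still post the image and simply own up to the bug in the tweet text.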

Error handling

As you can see, many things can go wrong, but we just take it easy and log them with a bit of humor, as I mentioned before. So, for example, when something fails and the bot doesn't know exactly what (I can probably find the reason by checking the error log, but the bot can't do that yet), it will alert me on Twitter with something like this:

But if the bot detects a known issue like the text overlap problem I described above, it will mention that in the tweet:

This is just a first version of the error handling. We'll keep improving it.
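The known-versus-unknown split can be sketched like this. It's an illustration only: the TextOverlapError class, the run_step helper and both messages are invented for this example and are not the bot's real code or wording.

```python
import logging

logger = logging.getLogger("block_narrator")


class TextOverlapError(Exception):
    """Hypothetical error for text running into the artwork."""


# Placeholder messages, not the ones the bot actually tweets.
KNOWN_ISSUES = {
    TextOverlapError: "Oops, I talked too much and wrote over my own picture. I'm a bit buggy!",
}
UNKNOWN_ISSUE = "Something broke and I have no idea what. Human, please check my logs!"


def run_step(step):
    """Run one step of the bot; return (result, message_for_twitter)."""
    try:
        return step(), None
    except Exception as error:
        # The full traceback goes to the error log for the human to read.
        logger.exception("Block Narrator step failed")
        # Known problems get a specific message, everything else a generic one.
        return None, KNOWN_ISSUES.get(type(error), UNKNOWN_ISSUE)
```

Keeping the messages in a dictionary makes it cheap to teach the bot about a new known issue: add an exception class and a line of text.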

Results

It's still a bit early to analyze the results of this tiny project because, while I write this, I still haven't publicly announced the bot. It's been alive for just a couple of days and hasn't had much interaction, but I can mention some things.

First, it was a super fun weekend experiment that I hope will grow. I learned a lot and I'm happy with the results. It was also a good exercise in trying to achieve something in less than 12 hours and not only living with the imperfections and buggy implementation, but assimilating them as part of the bot's personality.

Finally, the Block Narrator bot did get some interactions…from other bots! There's this thing called miner extractable value (MEV) that I was unaware of and people make MEV bots which seem to be profitable? I don't know but at least I learned something new thanks to the Block Narrator bot :)

Official links

Visit the Block Narrator's official website and Twitter account.